StreetSurf: Extending Multi-view Implicit Surface Reconstruction to Street Views

We present a novel multi-view implicit surface reconstruction technique, termed StreetSurf, that is readily applicable to street view images in widely used autonomous driving datasets, such as Waymo-perception sequences, without necessarily requiring LiDAR data.

As neural rendering research expands rapidly, its integration into street views has started to draw interest. Existing approaches to street views either focus mainly on novel view synthesis with little exploration of the scene geometry, or rely heavily on dense LiDAR data when investigating reconstruction. Neither line of work investigates multi-view implicit surface reconstruction, especially under settings without LiDAR data.

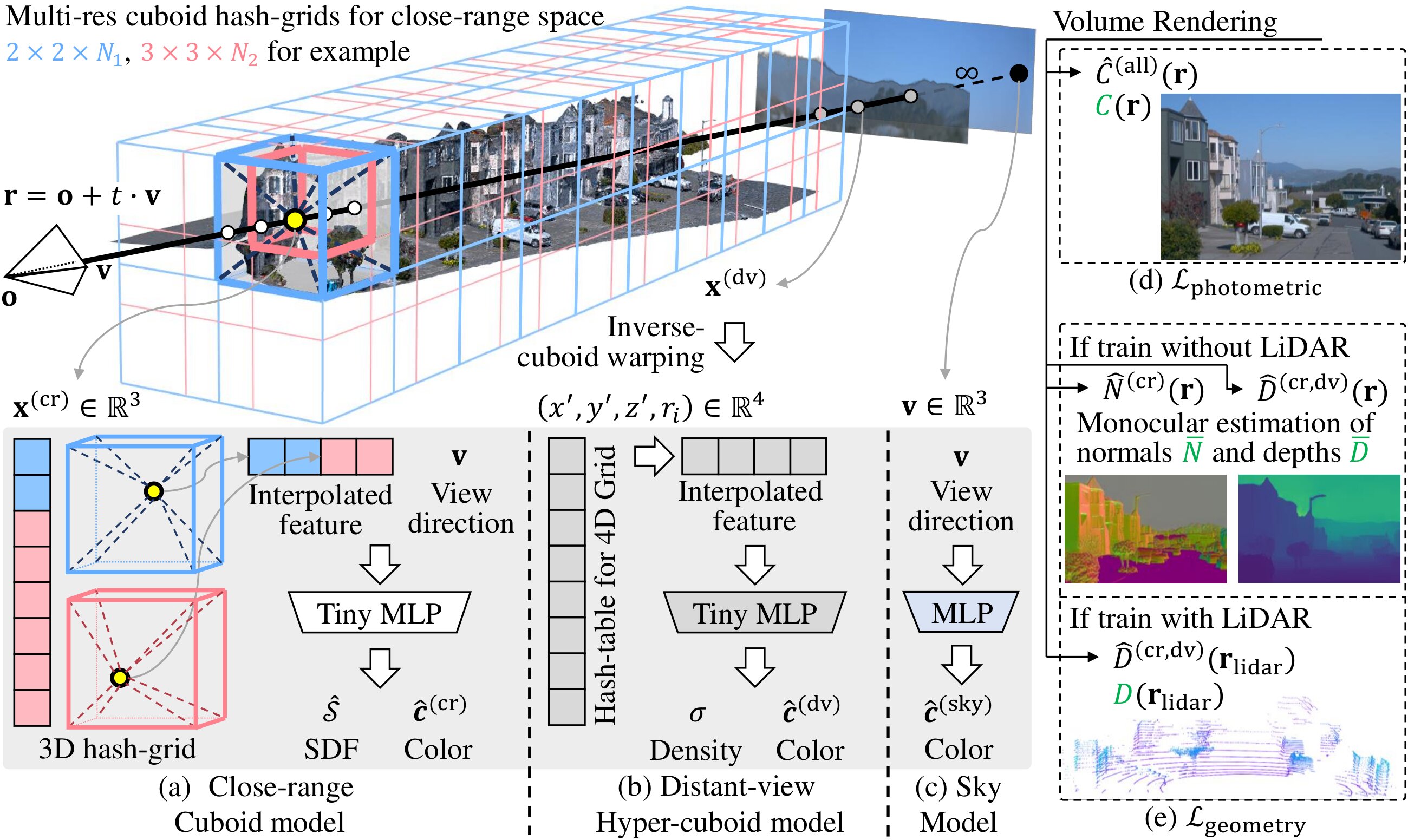

Our method extends prior object-centric neural surface reconstruction techniques to address the unique challenges posed by unbounded street views that are captured with non-object-centric, long and narrow camera trajectories. We delimit the unbounded space into three parts (close-range, distant-view and sky) with aligned cuboid boundaries, and adapt cuboid/hyper-cuboid hash-grids along with a road-surface initialization scheme for a finer and disentangled representation. To further address the geometric errors arising from textureless regions and insufficient viewing angles, we adopt geometric priors that are estimated using general-purpose monocular models.
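The cuboid split described above can be illustrated with a standard ray/box slab test. This is a minimal sketch, not the StreetSurf implementation: the function names, the box extents and the labels `"cr"`/`"dv"` are assumptions made for illustration, and the sky part (which lies behind all finite samples) is not modelled here.

```python
import math


def ray_cuboid_interval(origin, direction, box_min, box_max):
    """Slab test: return (t_near, t_far) where the ray lies inside the
    axis-aligned cuboid, or None if the ray misses it."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of faces: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near, t_far


def label_sample(t, interval):
    """Label a sample at ray depth t: 'cr' (close-range) inside the cuboid,
    'dv' (distant-view) everywhere else."""
    if interval is not None and interval[0] <= t <= interval[1]:
        return "cr"
    return "dv"


# Hypothetical close-range cuboid, elongated along the driving direction (x).
box_min, box_max = (-10.0, -15.0, -2.0), (90.0, 15.0, 8.0)
interval = ray_cuboid_interval((0.0, 0.0, 1.5), (1.0, 0.0, 0.0), box_min, box_max)
labels = [label_sample(t, interval) for t in (30.0, 120.0)]
```

With these assumed extents, a forward-looking ray stays in the close-range part for its first 90 m, and samples beyond that depth fall to the distant-view part.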

Coupled with our implementation of an efficient and fine-grained multi-stage ray marching strategy, we achieve state-of-the-art reconstruction quality in both geometry and appearance within only one to two hours of training time on a single RTX 3090 GPU for each street view sequence. Furthermore, we demonstrate that the reconstructed implicit surfaces have rich potential for various downstream tasks, including ray tracing and LiDAR simulation.

StreetSurf overview. Using a collection of posed images, along with an optional set of LiDAR data or monocular cues as inputs (or supervision), we reconstruct the street-view scene through an optimization process. Each scene is divided into three parts according to the viewing distance, namely close-range (cr), distant-view (dv) and sky.

StreetSurf primarily takes posed images as input and does not necessarily rely on dense LiDAR input. It works well with dense LiDAR, with only sparse LiDARs, or without any LiDAR at all. The results below were obtained on a widely used autonomous driving dataset, the Waymo Open Dataset.

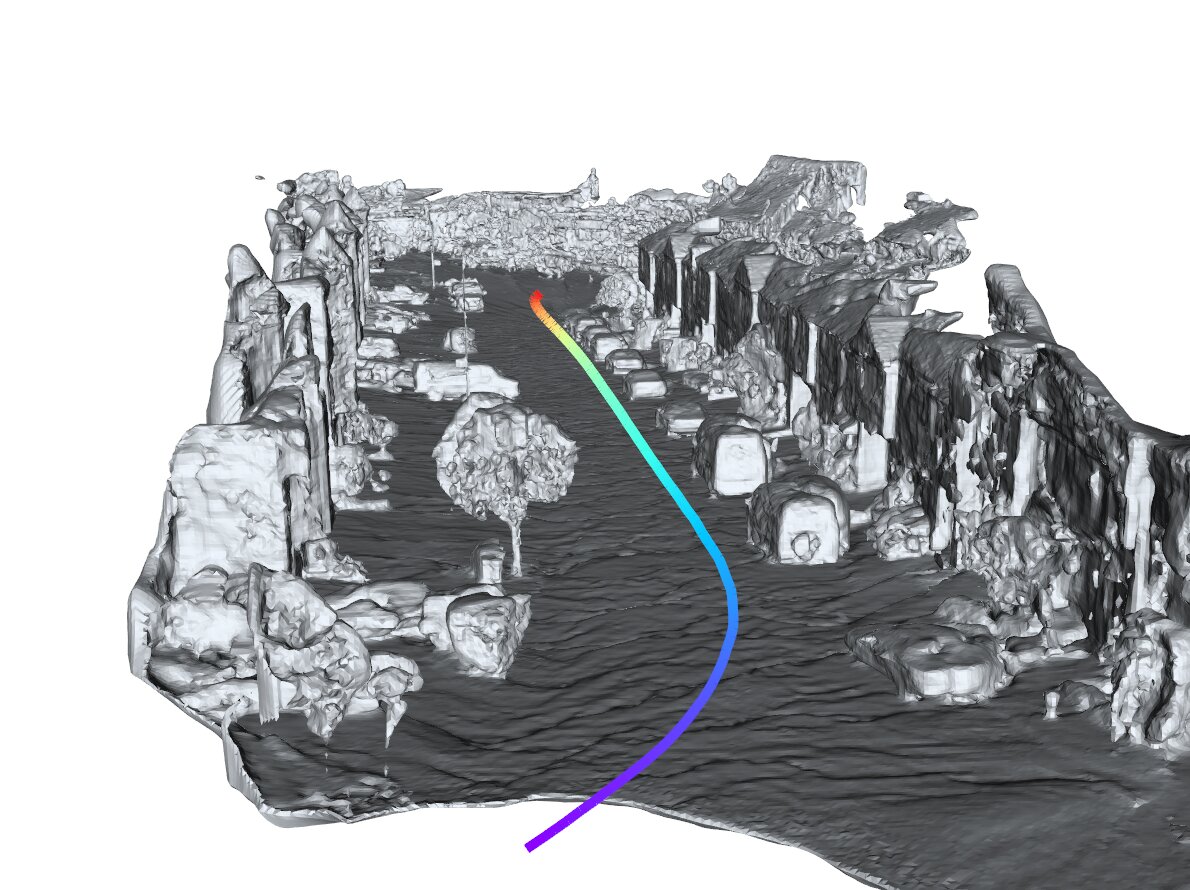

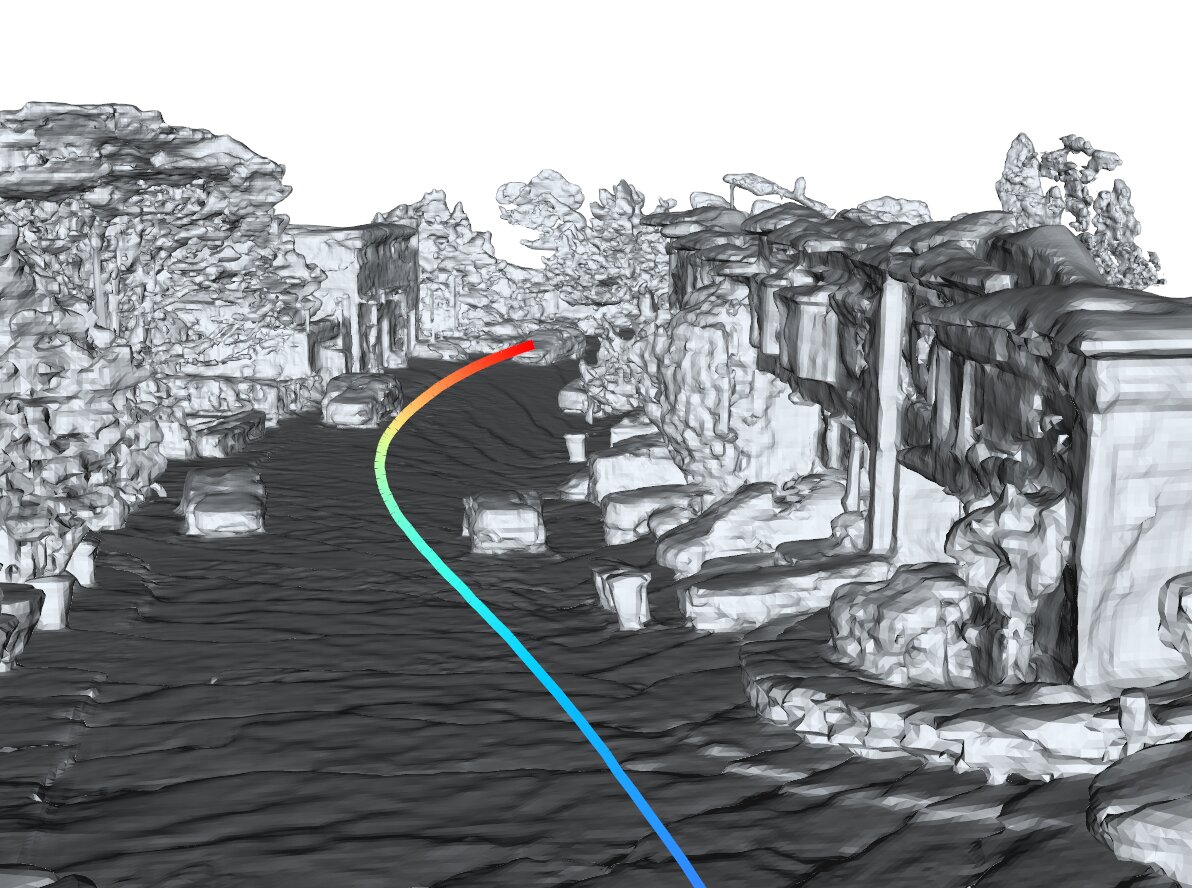

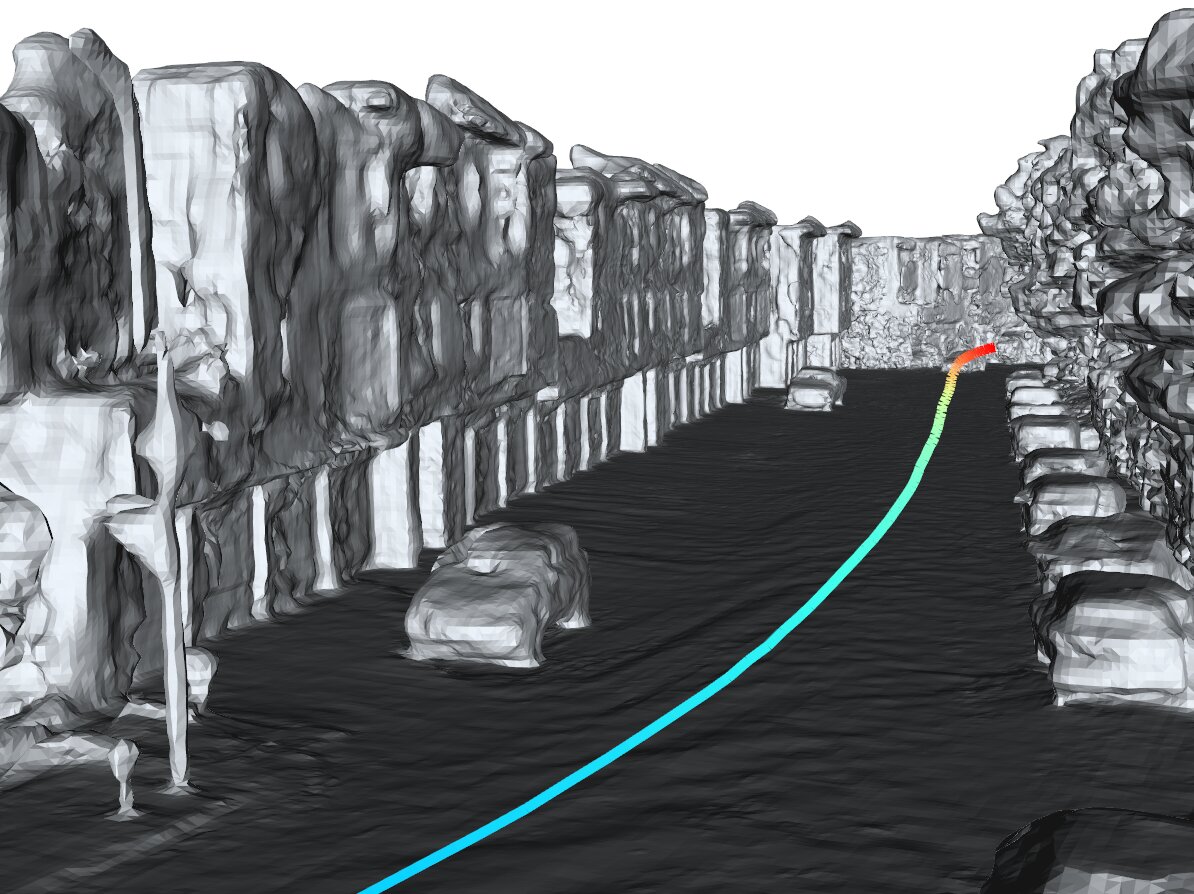

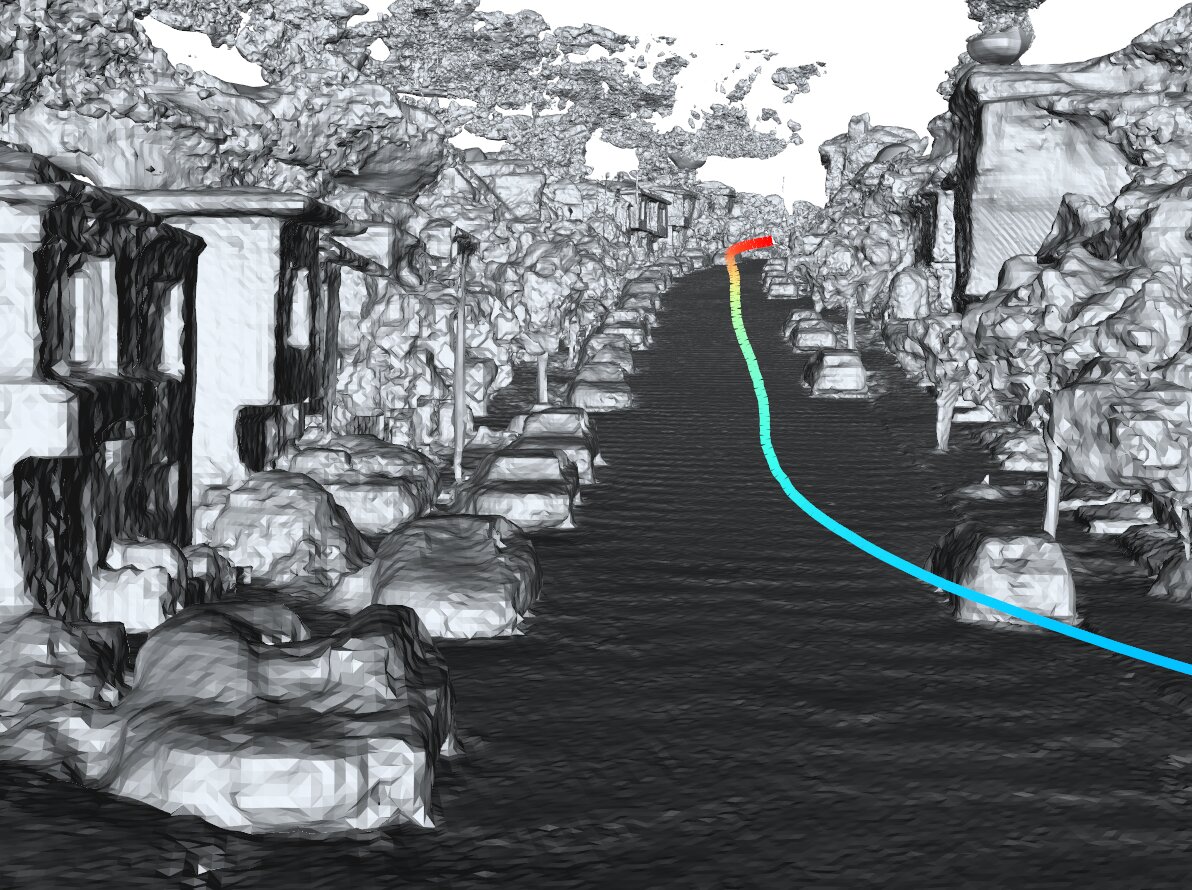

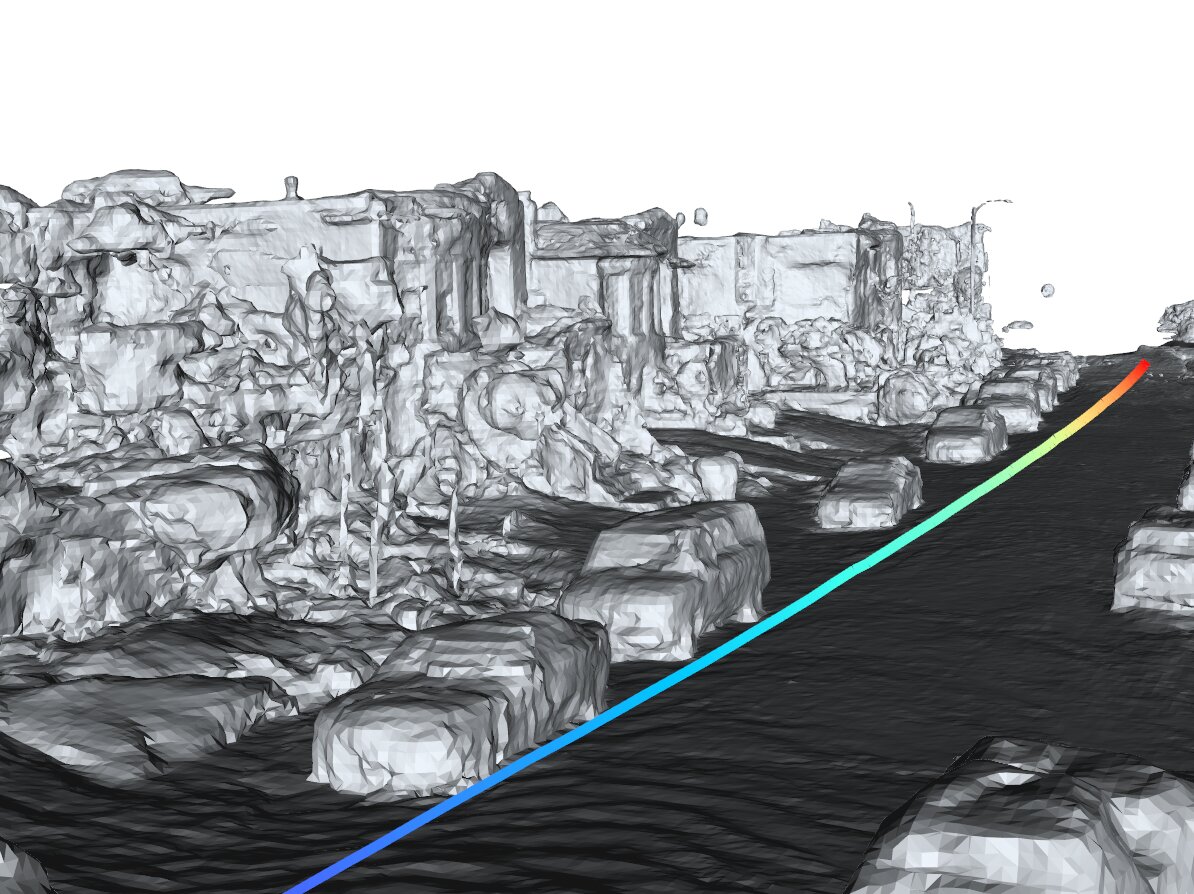

Multi-view reconstruction using posed images and the four sparser auxiliary LiDARs, while excluding the dense TOP LiDAR.

seg100613..., marching cubes @ 0.1m

seg153495..., marching cubes @ 0.1m

seg106762..., marching cubes @ 0.1m

seg158686..., marching cubes @ 0.1m

seg152706..., marching cubes @ 0.1m

seg132384..., marching cubes @ 0.1m

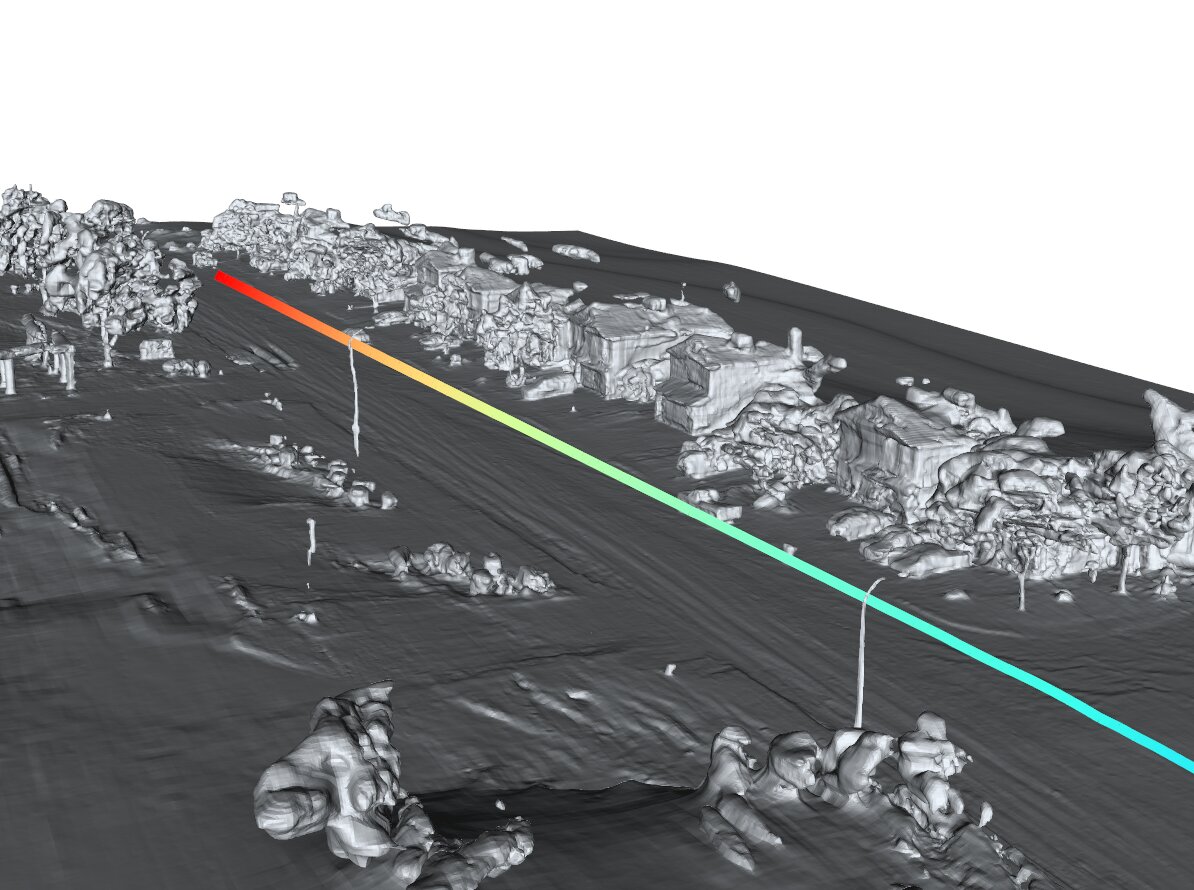

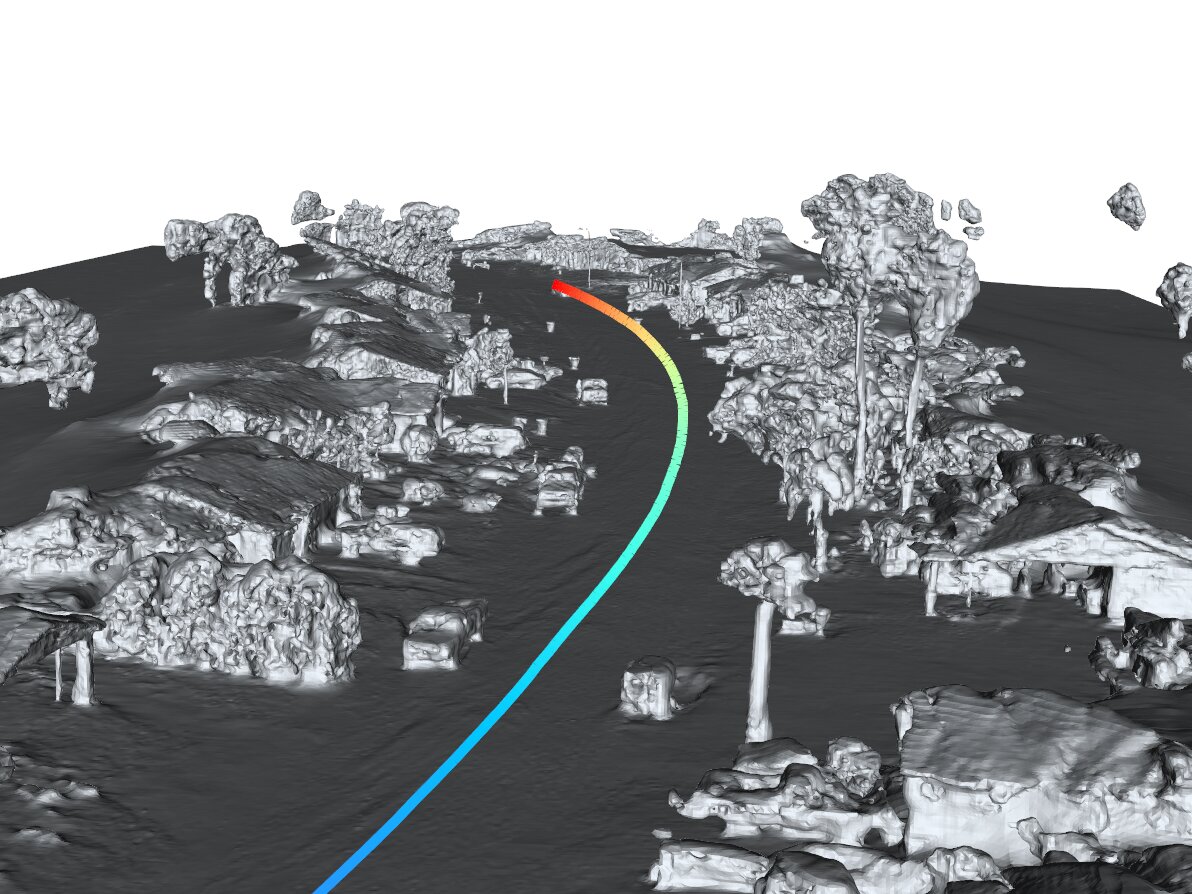

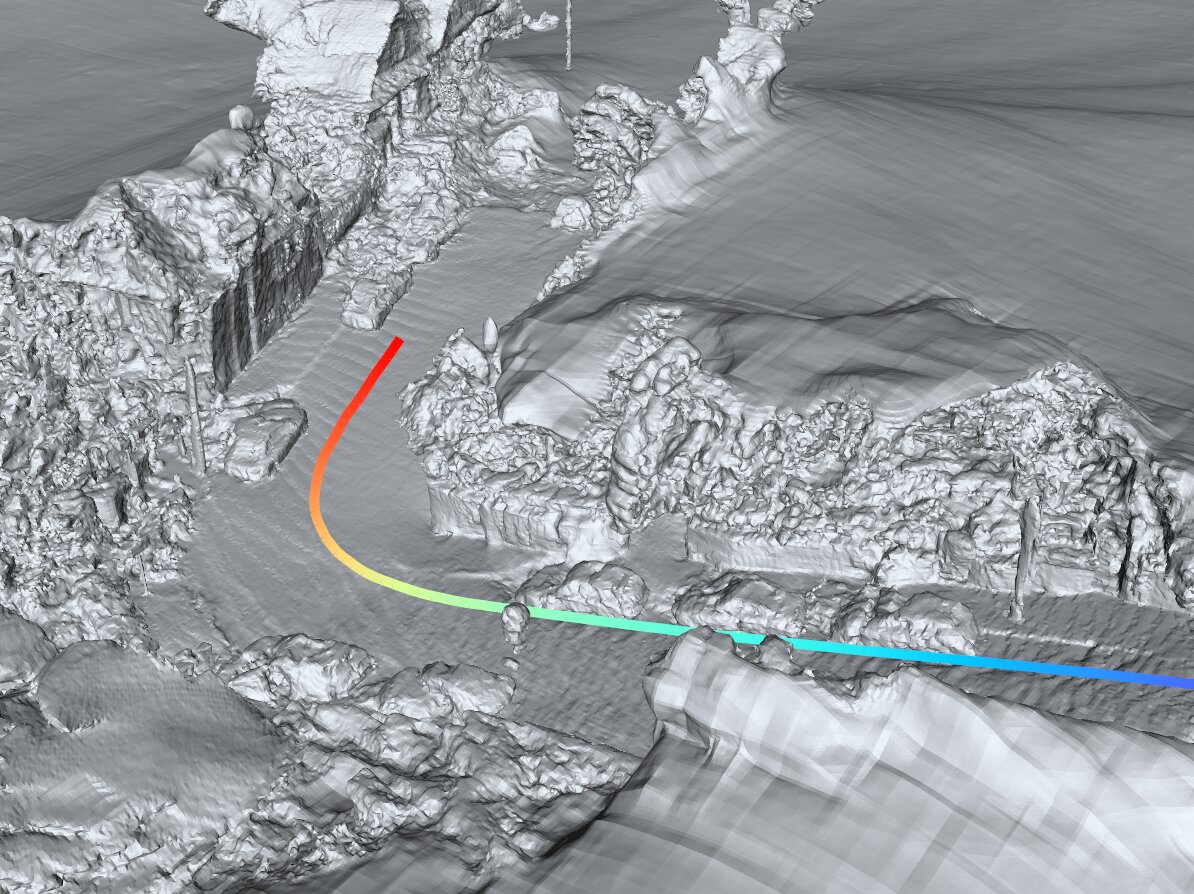

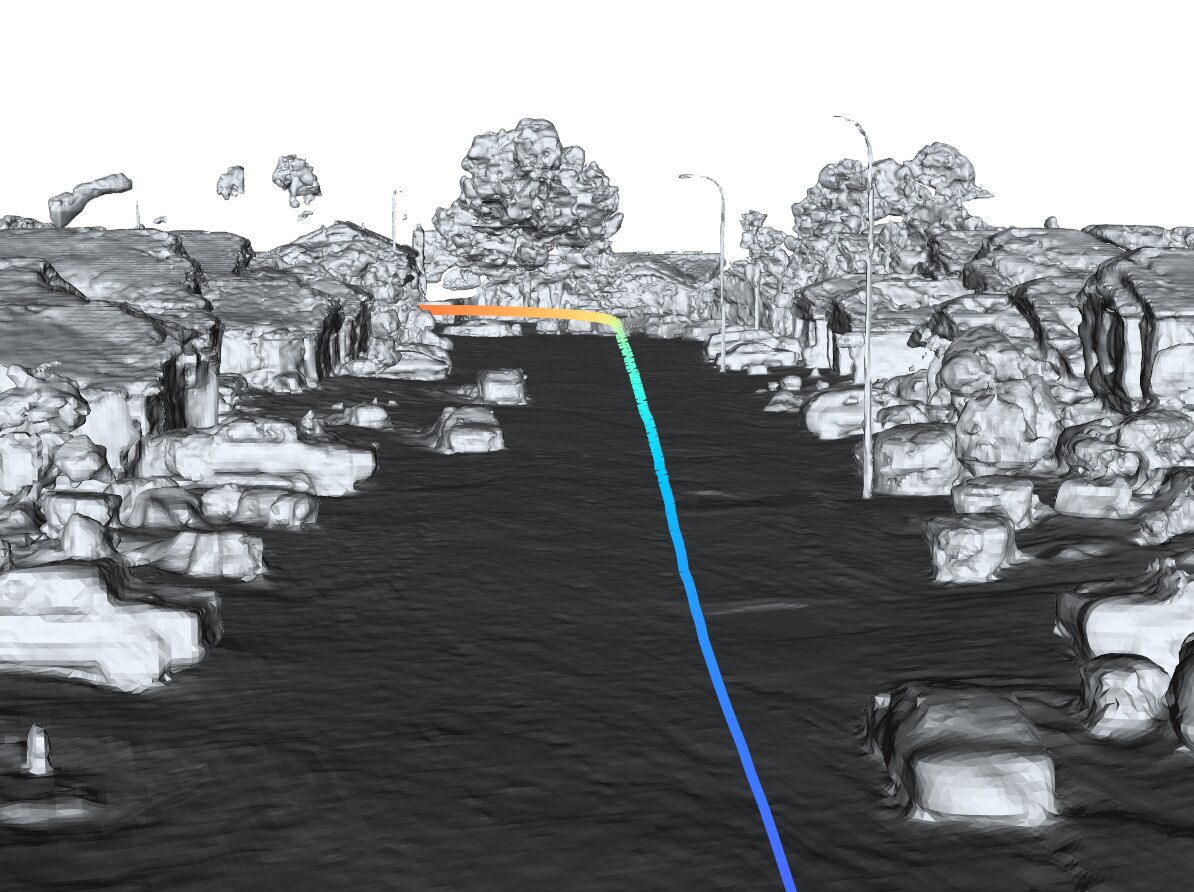

Multi-view reconstruction using only posed images and inferred cues from these images.

seg134763..., marching cubes @ 0.1m

seg163453..., marching cubes @ 0.1m

seg405841..., marching cubes @ 0.1m

seg102751..., marching cubes @ 0.1m

Using StreetSurf, you can obtain a continuous representation (SDF) of the scene geometry with infinitesimal granularity. You can then extract high-resolution meshes or occupancy grids from the reconstructed implicit surface.

Note that the meshes are heavily optimized to reduce size for online loading.
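To make the extraction step concrete, the sketch below samples a signed distance function on a regular grid with 0.1 m voxels and marks the voxels whose centre lies inside the surface. It is an illustration under stated assumptions: `sphere_sdf` is a hypothetical analytic stand-in for a trained StreetSurf model, and `occupancy_grid` is not a function from the StreetSurf codebase.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=0.5):
    """Hypothetical stand-in SDF: negative inside the surface, positive outside."""
    return math.dist(p, center) - radius


def occupancy_grid(sdf, lo, hi, voxel=0.1):
    """Evaluate `sdf` at voxel centres inside the box [lo, hi] and return
    (grid, dims), where grid[i][j][k] is True when the centre is inside."""
    dims = [max(1, round((h - l) / voxel)) for l, h in zip(lo, hi)]
    grid = [[[sdf((lo[0] + (i + 0.5) * voxel,
                   lo[1] + (j + 0.5) * voxel,
                   lo[2] + (k + 0.5) * voxel)) <= 0.0
              for k in range(dims[2])]
             for j in range(dims[1])]
            for i in range(dims[0])]
    return grid, dims


grid, dims = occupancy_grid(sphere_sdf, (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0), voxel=0.1)
occupied = sum(cell for plane in grid for row in plane for cell in row)
print(dims, occupied)
```

A mesh would be obtained from the same kind of sampled grid by running marching cubes on the raw SDF values at the zero level set, rather than thresholding them as done here.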

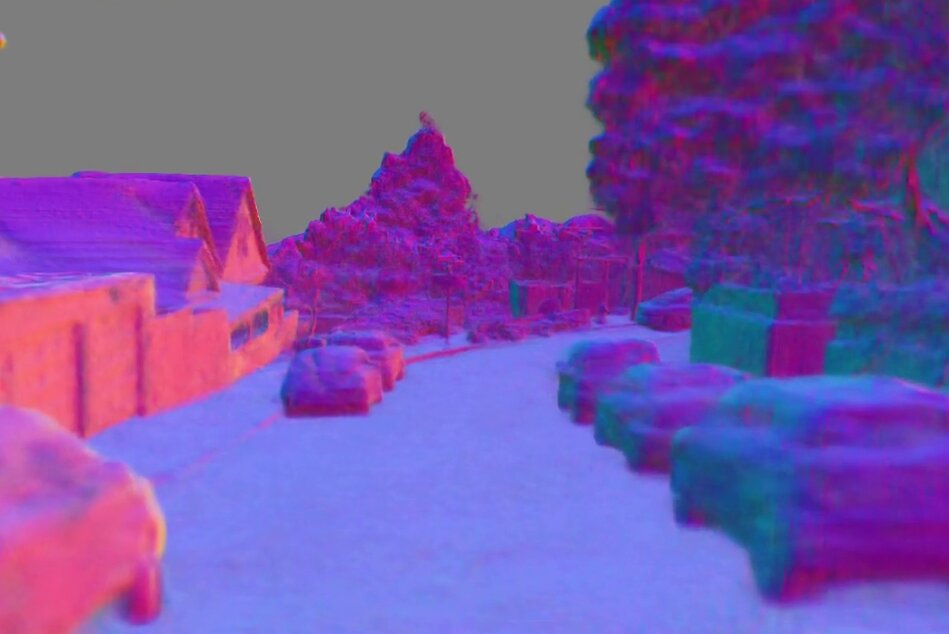

One of the major drawbacks of volume rendering is that its rendered depth is often ambiguous and inaccurate. With StreetSurf, however, the rendering process can be naturally guided by the reconstructed implicit surface representation.
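One way an SDF can give a well-defined depth is sphere tracing, which steps along each ray by the current distance value until the surface is reached. The following is a minimal sketch on a hypothetical analytic scene (a ground plane plus one sphere); `scene_sdf` and `sphere_trace` are illustrative names, and a reconstructed StreetSurf model would take the place of the analytic function.

```python
import math


def scene_sdf(p):
    """Hypothetical stand-in scene: ground plane z = 0 and a unit sphere."""
    ground = p[2]
    ball = math.dist(p, (5.0, 0.0, 1.0)) - 1.0
    return min(ground, ball)


def sphere_trace(sdf, origin, direction, t_max=100.0, eps=1e-4, max_steps=256):
    """March along a unit-length ray, advancing by the SDF value at each step.
    Returns the hit depth, or None if nothing is hit within t_max."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t          # close enough to the surface: report this depth
        t += dist             # safe step: no surface is nearer than `dist`
        if t > t_max:
            return None
    return None


depth_ball = sphere_trace(scene_sdf, (0.0, 0.0, 1.0), (1.0, 0.0, 0.0))
depth_road = sphere_trace(scene_sdf, (0.0, 0.0, 1.5), (0.6, 0.0, -0.8))
depth_sky = sphere_trace(scene_sdf, (0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
```

Unlike a volume-rendered depth, which is a weighted average over many samples, each traced ray here ends at a single surface crossing, or reports no hit at all.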

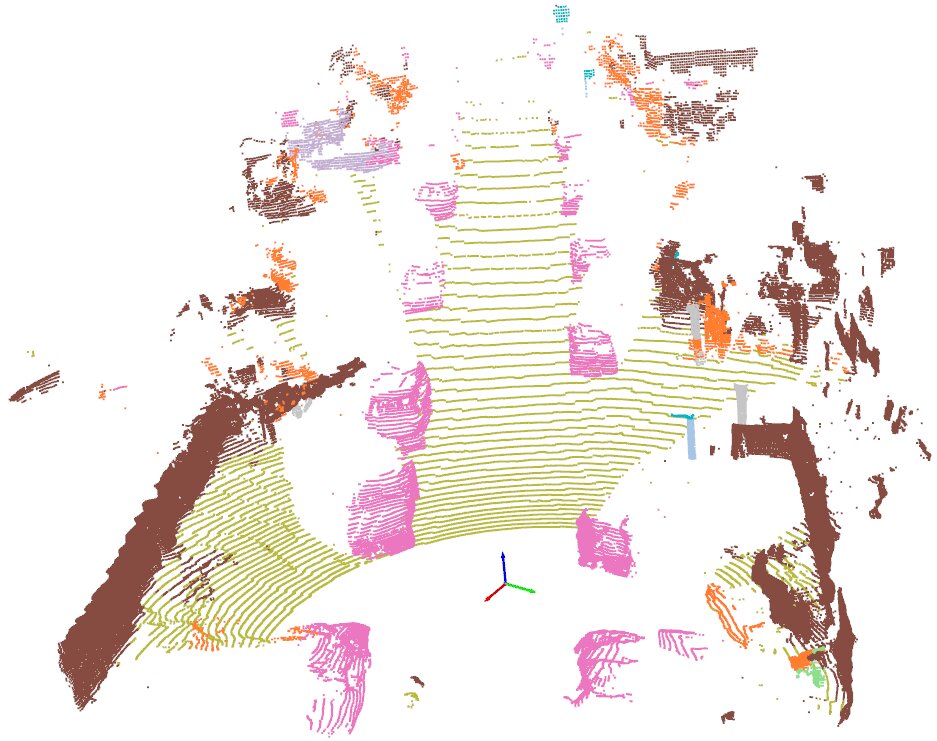

Comparison of the ground-truth TOP LiDAR pointcloud with the simulated pointcloud projected from the volume-rendered depths on the original LiDAR beams.
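The projection from rendered depths to a simulated pointcloud amounts to placing one point per beam at `origin + depth * direction`. The sketch below shows that step together with a simple per-beam range error. The function names and the sample values are assumptions for illustration, and the error measure is not taken from the paper.

```python
def depths_to_points(origins, directions, depths):
    """Place one point per beam at origin + depth * direction.
    Beams with depth None (no return) are skipped."""
    points = []
    for o, d, t in zip(origins, directions, depths):
        if t is None:
            continue
        points.append(tuple(oi + t * di for oi, di in zip(o, d)))
    return points


def mean_abs_range_error(rendered, ground_truth):
    """Mean |rendered - ground truth| over beams that have both values."""
    pairs = [(r, g) for r, g in zip(rendered, ground_truth)
             if r is not None and g is not None]
    return sum(abs(r - g) for r, g in pairs) / len(pairs) if pairs else None


# Hypothetical beams: shared sensor origin, unit-length directions.
origins = [(0.0, 0.0, 2.0)] * 3
directions = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
rendered = [10.0, 4.5, None]       # the upward beam has no return
measured = [10.2, 4.5, None]

points = depths_to_points(origins, directions, rendered)
error = mean_abs_range_error(rendered, measured)
```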

Directly feeding the volume-rendered pointcloud into a 3D segmentation model obtained from PCSeg.

Directly applying sphere tracing instead of volume rendering.

GT Image

Volume-rendered normals

Volume-rendered image

Sphere-traced image

@article{guo2023streetsurf,
  title={StreetSurf: Extending Multi-view Implicit Surface Reconstruction to Street Views},
  author={Guo, Jianfei and Deng, Nianchen and Li, Xinyang and Bai, Yeqi and Shi, Botian and Wang, Chiyu and Ding, Chenjing and Wang, Dongliang and Li, Yikang},
  journal={arXiv preprint arXiv:2306.04988},
  year={2023}
}